Data Resists the Five-act Form

An interview with Francis Hunger – 18 September 2019.

C: How did you start researching data systems?

In my artistic research and artistic work I have been looking at how technology and society intertwine.

My personal background is that I grew up in East Germany, so in a (supposedly) socialist country. The more I researched that, the more I began to wonder why the technological development, and also the technological culture, in this socialist system were rather similar to those of the capitalist system. This was one of my starting points.

Another starting point is simply that, while being an artist and writer, I’m also earning my living from programming databases. So I’m pretty deeply involved with them. And I have the feeling that I’m able to talk about them in a way that is technically informed, but also from the more politically and theoretically informed perspective of a researcher.

These have been the vantage points.

At some point I realized that many people talk a lot about algorithms. An algorithm is basically a rule describing how to calculate something. The algorithm might be important for, let’s say, Google, to be able to give fast replies to searches; therefore they would want to optimize their search algorithm. But Google, and really everyone else, is not only concerned with the algorithm; there is more. A lot of work, for instance, is spent optimizing databases so that data is represented in a way that is easily searchable, or so that a query can be executed on it. It was through my experience of working for actual clients and doing the actual database programming myself that I understood that the algorithm is not the most important concept, and that there are other relevant concepts, such as data, the information model, or the query, to name a few.

R: In your text Epistemic Harvest you describe the database at great length and you identify some of the commonalities between a database and a dataset. Could you describe what constitutes a dataset for you, and how it relates to the database?

One thing that differentiates the two is that, as I understand it, a dataset is some kind of structure that holds data, while a database provides the software to work with this structure.

So basically the database provides the means to query the dataset.

Pretty much every dataset can be imported into a database system, although it may need some restructuring. That is often the work of people who deploy databases: they get an already existing dataset, for instance one or several Excel spreadsheets, and then look into how to restructure it so that it fits into a database system. This allows a different way of querying it, and so a different way of generating new information, compared to a single plain table.
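To make the restructuring step concrete, here is a minimal sketch in Python using the standard-library sqlite3 module. The flat rows, table names and columns are invented for illustration and do not come from any project mentioned here: a spreadsheet that repeats customer details on every row is split into two related tables, which can then be combined again through a join.

```python
import sqlite3

# Hypothetical flat "spreadsheet": one row per purchase, customer details repeated.
flat_rows = [
    ("Ada", "Berlin", "2019-09-01", 12.50),
    ("Ada", "Berlin", "2019-09-03", 7.00),
    ("Ben", "Leipzig", "2019-09-02", 20.00),
]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT UNIQUE, city TEXT);
    CREATE TABLE purchase (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(id),
        day TEXT,
        amount REAL
    );
""")

for name, city, day, amount in flat_rows:
    # Store each customer only once; repeated rows reuse the existing entry.
    conn.execute("INSERT OR IGNORE INTO customer (name, city) VALUES (?, ?)", (name, city))
    (customer_id,) = conn.execute(
        "SELECT id FROM customer WHERE name = ?", (name,)
    ).fetchone()
    conn.execute(
        "INSERT INTO purchase (customer_id, day, amount) VALUES (?, ?, ?)",
        (customer_id, day, amount),
    )

# The restructured tables are recombined in a query: total amount per city.
totals = conn.execute("""
    SELECT c.city, SUM(p.amount)
    FROM purchase p JOIN customer c ON c.id = p.customer_id
    GROUP BY c.city ORDER BY c.city
""").fetchall()
print(totals)  # [('Berlin', 19.5), ('Leipzig', 20.0)]
```

The design choice shown is ordinary normalization: each fact is stored once, and relations between tables replace repetition.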

R: In your writing you speak of the trinity of model-data-algorithm. Could you explain how you see this trinity? Which role does ‘data’ play therein, and how does this role of data relate to the database and the dataset?

The ‘trinity’ of model-data-algorithm is something that I came up with, but it’s not new.

As I mentioned, I struggle with the insistence on the algorithm in media theory.

Take any programming handbook for MySQL (and there are hundreds, if not thousands of them): when you just go through the first 30 pages, you notice that it doesn’t talk about the algorithm. It speaks about the information model or the data model, that is, how to organize the data in a way that it can be queried.

The question of how to organize data touches on the closed world assumption. The closed world assumption means that you assume that everything that is in the database is everything that you know, so you can make true or false statements. The contrary would be the open world assumption, which means that if there is no entry, or nothing that can be matched by the selected criteria, it cannot be assumed to be false. But the predicate logic of the relational calculus in relational databases needs a closed world. So the data model describes a closed world, a world cut off from everything that has been ignored and left out.
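A minimal sketch of the difference, assuming a hypothetical one-column member table in an in-memory SQLite database: under the closed world assumption a missing row answers “false”, while an open-world reading of the same table can only answer “unknown”, represented here by Python’s None. The table, names and functions are illustrative and not part of any real system.

```python
import sqlite3
from typing import Optional

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE member (name TEXT PRIMARY KEY)")
conn.executemany("INSERT INTO member VALUES (?)", [("Ada",), ("Ben",)])


def lookup(name: str) -> bool:
    """True if a matching row exists in the table."""
    row = conn.execute("SELECT 1 FROM member WHERE name = ?", (name,)).fetchone()
    return row is not None


def is_member_closed_world(name: str) -> bool:
    # Closed world: no matching row means the statement is false.
    return lookup(name)


def is_member_open_world(name: str) -> Optional[bool]:
    # Open world: no matching row only means "unknown", represented as None.
    return True if lookup(name) else None


print(is_member_closed_world("Ada"), is_member_closed_world("Cem"))  # True False
print(is_member_open_world("Ada"), is_member_open_world("Cem"))      # True None
```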

So the ‘trinity’ of data, model and algorithm goes as follows: the algorithm kind of optimises how to query data, while the information model defines it. In my opinion the information model is therefore much more important than the algorithm, because the data model defines what becomes data. Everything that is not described in the data model does not become data.

So if you imagine the dataset as a table, the names of the columns create the data model: they describe what you include and what you exclude.

We can now define data as something that is described in the information model; everything that is not described there is non-data. It does not exist for the database, because it simply cannot be queried.
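The point that columns draw the line between data and non-data can be shown in a few lines of Python with sqlite3. The table below is a hypothetical information model that borrows the temperature, density and colour example used later in this interview: a property that has no column cannot be asked about at all, and SQLite rejects the query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical information model: only temperature and density are columns.
conn.execute("CREATE TABLE sample (temperature REAL, density REAL)")
conn.execute("INSERT INTO sample VALUES (21.5, 1.2)")


def query(column: str):
    """Select one column; report a property outside the model as non-data."""
    try:
        # The column name is interpolated only for this illustration;
        # never build SQL this way from untrusted input.
        return conn.execute(f"SELECT {column} FROM sample").fetchall()
    except sqlite3.OperationalError as err:
        return f"non-data: {err}"


print(query("temperature"))  # [(21.5,)]
print(query("colour"))       # "non-data: " followed by SQLite's "no such column" message
```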

R: Thank you. In your work you describe how each database creates its own subject or dividual, through its structuring, through its columns. How does the way in which the dataset is created influence the structuring of this dividual?

Well, the person who creates the dataset usually has some kind of question they want to answer, or some task they want to solve. This leading question dictates which part of reality the person wants to include in the dataset and which part not.

In this way the person makes up a mental information model of reality, because they decide what to include, and thereby what to measure. We also know this from physics experiments, for instance: I might be looking at the temperature and density of something while ignoring other properties, like its colour, because for my question the colour is unimportant.

Once we have created this mental model and included or excluded certain parts of reality, a decision has already been made about how to address the subjects, given that your data relates to subjects.

R: In your research you also speak of the expansion of data collection as the expansion of the production of transactional metadata, and you reframe the leading assumption that surveillance is the primary driver of data collection. You even go so far as to call the focus on surveillance quite a self-centered perspective. If it is not surveillance or privacy, what then is at stake for data critique?

Currently this only exists as an outline on my blog, but I hope to develop this question further. However, this kind of critique has not only been developed by myself: just recently Evgeny Morozov has written a longer article critiquing Shoshana Zuboff’s notion of surveillance capitalism.

Back in 1994, for instance, Philip E. Agre, who is interesting because he was also a computer scientist who then began to write on media-theoretical questions, developed the notion of capture instead of surveillance. Recently I came across an article by Till A. Heilmann (from 2015, in German) where he writes about data labour in capture capitalism and proposes to use the perspective of labour instead of surveillance.

The problem with the surveillance idea is that it always ends up in a kind of moralistic call towards companies “Oh my god I’m being surveilled it’s so bad, I can do nothing. It’s a horror!” It actually destroys the idea that you can do something about it and that you can act upon it.

Whereas if you see it from the perspective of how labour goes into the production of data, and how subjectivity is captured or scraped through data and then made into something that has value (for instance in advertising), then you might ask further questions that go more in the direction of how to regulate this: how to work through law (and not just through morals) to define a set of rules on how to behave or act within data space. This means taking a political stance towards the issue, not just a moralistic one.

In the book Machine Learners (2017), Adrian Mackenzie explores this question precisely for the machine learning and Artificial Intelligence ecologies. He looks into how machine learning datasets are created and how much labour goes into them.

Artists such as Adam Harvey also loosely touch upon these subjects when they look into machine learning datasets like Labeled Faces in the Wild and investigate from whom the data is taken, under which circumstances, and how those people have been compensated for providing their data. Usually not at all.

C: Highlighting the labour aspect is a really interesting point, as it immediately brings in the question of agency, and reframing the discussion around labour might open up the possibility of self-organisation. The workers could organise and make their own datasets, or their own databases, as a means of self-representation and also of employing the entire economic structure that datasets pertain to.

The Data Workers Union by Manuel Beltrán is a union of those people who provide data. While it’s an artistic project, they are talking about things like data ownership, digital colonialism, taxes, data basic income and data exploitation. I think this is the way to actually look into these things and to get a critical grasp on them.

R: In your text Epistemic Harvest you describe how computational capital is only able to work “when humans produce expressions that can be made symbolic and processed”1. You see the computable as that without meaning, so how does the interface interfere or function in this process of meaning making?

Can you give an example?

C: For one workshop we were working with the Enron dataset. You may be familiar with it: it’s a natural language processing dataset that consists of thousands of emails between employees of the Enron Corporation, an American energy company that went bankrupt in the early 2000s. We were interested in the influence that the dataset has had on the field of machine learning. A certain company culture is present within this collection of emails, which struck us as workaholic. So we queried, for example, emails that were sent after 3 a.m., or emails that talk about the physical state of the person writing them, in an attempt to create distinct narratives that might hint towards the larger ones at play within the entire collection. From there we created short theater plays in collaboration with another artist, which were enacted on the spot. So in that particular workshop you could say we used our own bodies as interfaces to reenact the content of the dataset. After the workshop, we made a digital interface to enable more scripts to be made.
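The kind of query described here can be sketched as two filters over email records. This is a hypothetical illustration in Python, not the workshop’s actual code: the records are invented placeholders rather than messages from the Enron corpus, the “after 3 a.m.” window is assumed to mean 03:00 to 05:59, and the keyword list for physical state is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Email:
    sender: str
    sent: datetime
    body: str


# Hypothetical records standing in for messages from an email corpus.
emails = [
    Email("a@example.com", datetime(2001, 5, 14, 3, 42), "Still here, exhausted."),
    Email("b@example.com", datetime(2001, 5, 14, 10, 5), "Meeting moved to noon."),
    Email("c@example.com", datetime(2001, 5, 15, 4, 10), "Numbers attached."),
]

# Hypothetical keyword list for mentions of physical state.
BODY_WORDS = ("tired", "exhausted", "sleep", "sick")


def sent_in_small_hours(mail: Email, start: int = 3, end: int = 6) -> bool:
    # Assumed reading of "after 3 a.m.": hour in [start, end).
    return start <= mail.sent.hour < end


def mentions_body(mail: Email) -> bool:
    # Naive substring match; a real study would need more careful text handling.
    text = mail.body.lower()
    return any(word in text for word in BODY_WORDS)


late = [m.sender for m in emails if sent_in_small_hours(m)]
late_and_tired = [m.sender for m in emails if sent_in_small_hours(m) and mentions_body(m)]
print(late)            # ['a@example.com', 'c@example.com']
print(late_and_tired)  # ['a@example.com']
```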

I think that’s a great project and a relevant approach to work artistically with these topics.

Since I cannot elaborate on the Enron dataset, let me discuss the question of the interface in a more abstract way, using the example of the table. To my knowledge, only a few designers have written about tables and how they create and organize meaning. Regarding the table, I only found one or two chapters in Edward Tufte’s writings, and then there is another designer, Stephen Few, who has published the book Show Me the Numbers and has written about tables. Apart from these, I haven’t seen much.

Tables are a cultural technique which allows us to bring information into a formation. They create meaning through spatial differences: a spatial relation creates meaning. This is how interfaces work, because they are able to integrate graphics, text and moving images. The positioning on the screen or on a printout then determines a certain order. Here the Foucauldian notion of The Order of Things comes in, and of course with the order also comes a hierarchy.

What is important to understand about the table as an expression of, or an interface to, data is that, as it is first of all a construction, it can always be constructed differently. And it’s the designer who decides how the construction is made and which qualities are inherent in it.

One important quality is the sorting or order. In the European, modernist way of recognition, things that are on top are more important; they are of a higher order than other objects that are lower. So when we sort a table differently we create a different meaning with each sorting.

Another operation that’s important for generating meaning in this interface is grouping. The natural order usually refers to the order in which data has been recorded, which is in itself an unordered situation: it’s a logic based on the entry of data. This could be completely random, or in the order of some transactions that have been recorded. If you group information, you understand things differently than if you just have the ‘natural’ order of the table.

The third operation is filtering out certain rows. That is, you take out certain data, because you decide these entities are not important to show in regards to the question that you want to answer.
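The three operations described above, sorting, grouping and filtering, can be sketched in plain Python over a list of rows. The rows are hypothetical. Note that itertools.groupby only merges adjacent rows, so the table has to be sorted by the grouping key first, which is itself a small example of one ordering decision shaping another.

```python
from itertools import groupby
from operator import itemgetter

# Hypothetical rows in their "natural" order, i.e. the order in which they were recorded.
rows = [
    {"city": "Leipzig", "item": "tree", "count": 4},
    {"city": "Berlin", "item": "postbox", "count": 2},
    {"city": "Berlin", "item": "tree", "count": 9},
    {"city": "Leipzig", "item": "postbox", "count": 1},
]

# Sorting: whatever ends up on top reads as most important.
by_count = sorted(rows, key=itemgetter("count"), reverse=True)

# Grouping: sort by the key first, because groupby only merges adjacent rows.
by_city = {
    city: [r["item"] for r in group]
    for city, group in groupby(sorted(rows, key=itemgetter("city")), key=itemgetter("city"))
}

# Filtering: rows judged irrelevant to the question are dropped from view.
trees_only = [r for r in rows if r["item"] == "tree"]

print(by_count[0]["count"])              # 9
print(by_city)                           # {'Berlin': ['postbox', 'tree'], 'Leipzig': ['tree', 'postbox']}
print([r["count"] for r in trees_only])  # [4, 9]
```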

It all comes down to spatial logic. I have already used a few notions here. Firstly, one can operate on tables. Furthermore, a table also represents a process: the process of being filled with data. So if you have an empty cell, this empty cell shows one of two things: either that there is no value, which means the value is zero or ‘nothing’, or it says “please fill me in with data! Please, I need more data.”
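Relational databases keep the two readings of an empty cell apart: zero is a recorded value, while NULL marks a cell with no value. A minimal sketch with Python’s sqlite3 and invented readings shows that aggregates such as AVG and COUNT(column) skip NULL, that IS NULL finds the cells still “asking” to be filled, and that treating the empty cell as zero changes the answer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reading (station TEXT, value REAL)")
# Hypothetical readings: station A recorded zero, station B has an empty cell (NULL).
conn.executemany(
    "INSERT INTO reading VALUES (?, ?)",
    [("A", 0.0), ("B", None), ("C", 6.0)],
)

# AVG and COUNT(value) skip NULL, so only A and C are counted.
avg_value = conn.execute("SELECT AVG(value) FROM reading").fetchone()[0]
filled = conn.execute("SELECT COUNT(value) FROM reading").fetchone()[0]

# Cells still waiting to be filled.
to_fill = [s for (s,) in conn.execute("SELECT station FROM reading WHERE value IS NULL")]

# Reading the empty cell as zero instead gives a different average.
as_zero = conn.execute("SELECT AVG(COALESCE(value, 0)) FROM reading").fetchone()[0]

print(avg_value, filled, to_fill, as_zero)  # 3.0 2 ['B'] 2.0
```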

C: That’s a good point, it would be useful to distinguish between ways in which data is already interfaced, and interfaces that we would like to imagine. That applies both to methods of ordering data and methods of presenting it. For us, one way of making the dataset more legible was to create these sub-groupings based on the queries we mentioned before. The order wasn’t specifically emphasized, but the fact that they existed as a sub collection within a larger collection gave some clarity to the material.

It is really important to look into these processes of ordering and sorting because there are a lot of subconscious decisions happening that influence how you represent and how you view data. They are cultural techniques and we often forget that we are actually doing it; we take it as a given.

For tables or for any kind of dataset, classification is a very important term because again, what appears in datasets – what is taken in – was decided already in advance. And often this is also a classification decision.

There’s this beautiful book by Geoffrey Bowker and Susan Leigh Star, Sorting Things Out: Classification and Its Consequences (1999), in which they also explore the notion of classification.

R: Could you expand a bit more on what kind of struggles you have and how this influences your art practice?

In media arts we have seen a lot of projects that take some sort of data and transfer it into another kind of interface. For example, you enter a room, some sensors record your movement and the light in the room changes. We have seen thousands of variations of this; some of them go by the notion of generative art.

I understand it is important to explore this interaction at some point – when you are studying media arts, or just when you enter the field, you have to understand these relations. It is fascinating to see how certain data can be brought into form aesthetically. However, I see limitations and a lot of repetition in this genre. The question is always, beyond the ritual spectacle, what can be experienced and learned from that?

This was the motivation to look for something different. I am not saying that I found some real solution, not at all; I am very uncertain and exploring. There is this notion by Bertolt Brecht, who said “petroleum resists the five-act form”. He was referring to the point that you have to make up characters who can then have a story, to whom something happens that can be dramatised. I went into using narration and looked into how we can talk about datasets and databases: about what they do, how they order things and how they create a discourse.

For Deep Love Algorithm (2013), I invented two characters – Jan and Magda – who would undergo certain transformations in their life. There is a love story between the two. At the same time, they are researching the question of databases. Their love is unfulfilled and their explorations and the promises of databases are also unfulfilled in a certain way.

Another thing that I did was the Database Dérives. With a group of ten interested people we walked through the city and tried to explore, just from looking around us, how we could identify that a database is involved.

For instance, when we walked down the street in Berlin we saw that each tree has a small sign on it with a number printed on it. Almost certainly there is a database: all those trees have to be in some list, in a cadastre. Then we came across a yellow postbox where you can put your letters. One participant explained that inside this postbox there is a bar code: whenever the post is picked up and brought to the central hub, the person who picks it up scans both this bar code and the container’s bar code, so the two can be connected to each other. Again, there is a database involved. Of course we also came across an ATM, for which we have to identify ourselves by entering a PIN. Providing a login or a PIN and identifying ourselves in interfaces is also a very good sign that there may be a database behind it.

The Database Dérive was another kind of reaction: not so narrative, yet very communicative among the participants. It refers to Bowker and Star’s notion of infrastructural inversion, the idea of recording the visible ends of an infrastructure. I would see the database as an infrastructure that is hidden from plain sight. By looking at what is still visible, you try to recognize the existence of this infrastructure.

R: I really like the notion of infrastructural inversion as a way to describe that practice. The Data Walk initiated by Alison Powell is perhaps similar to what you described, only in her version you look for how data is being recorded. It inspired us to organise the Data Flaneur: we also walked the streets, but we took the position of dataset creators. In groups we came up with matters of concern and then set out to gather our data through walks.

C: Do you know the work of Mimi Onuoha on missing datasets?

No.

C: You might find it interesting. There is a GitHub page where she expands on the concepts, and one paragraph really stood out to me; I’ll read it now: “The word missing is inherently normative. It implies both a lack and an ought: something does not exist, but it should. That which should be somewhere is not in its expected place; an established system is disrupted by distinct absence. Just because some type of data doesn’t exist doesn’t mean it’s missing, and the idea of missing data is inextricably tied to a more expansive climate of inevitable and routine data collection.” I really like that she frames missing as something normative. As soon as a gap is made visible through an empty cell, there is a need to fill it, like you said before. She argues that even if something might be missing in a table, that doesn’t mean that it’s missing completely or that it should be found.

Speaking from the practicalities of table making, empty datasets or ‘missing datasets’ start the process of generating new knowledge. They start the process of questioning: “Okay, why is that empty? Why isn’t there something? Let’s look into it and find new knowledge to fill it in.” At least, that has been so in the classical table.

But yeah, maybe I’m missing the point of the problem of normativity.

C: This text exists in the context of a larger research project, of which her art piece The Library of Missing Datasets is also part. Here she lists titles of datasets that do not exist, for example ‘Poverty and employment statistics that include people who are behind bars’. What I get from her work is that data creates a framework through which you view the world. And with the entire framework missing…

…the question is missing.

C: Exactly!

This is the sadness of a whole area of engineering that almost always just looks into solving the specific task it was given, while denying its responsibility for how it solves it, and also for looking further into what could be missing, or whether there are any other questions that need to be asked. So it is up to artists and designers to look into these voids. Although, in my opinion, engineers are in a much more powerful position than artists or designers to implement and change things. So from this perspective I think the kind of work that Mimi Onuoha does is very important.

It looks like we as artists and designers have to do the work that engineers don’t do.

Building a Feminist Dataset Together

Interview with Caroline Sinders – 22 October 2019.

R: In the Feminist Dataset project you used the format of a workshop to bring into discussion topics such as feminism and data justice. During these meetings you mapped out possible text documents that could become part of a dataset. Could you maybe tell us a little bit about how these sessions unfolded and at what stage this project is now?

With the Feminist Dataset, I am taking a critical design approach to creating with machine learning. Are you familiar with Thomas Thwaites’ toaster project? He was a student at the Royal College of Art under Anthony Dunne and Fiona Raby and created a toaster from scratch. Part of this was to highlight its ecological properties, as well as to show the amount of material that goes into making this everyday object. I wanted to take a similar approach to machine learning: to analyse every part of the machine learning pipeline from start to finish under the framework of intersectional feminism as defined by Kimberlé Crenshaw’s work.

Part of doing the project is also to recognise that collecting a dataset of things that would fit an intersectional feminist framework is really hard to do, and at some point it may actually turn out to be impossible. Now that I have collected enough data (even though I am still holding workshops to collect more data and make the dataset a bit more diverse), I am moving on to the next step, which is analysing the labour around creating and training a data model. Developers and researchers use platforms like CrowdFlower or Amazon Mechanical Turk. But neither of those systems is really feminist or equitable. Can creating a data model ever be a feminist practice?

What can you do to transform that labour to make it a feminist act? Some of that is thinking about what a labour union for Mechanical Turk would look like. Can we create a version of Mechanical Turk that is feminist in practice? How do we do that? That is the next step of the project.

R: And could you briefly describe the structure of the Feminist Dataset workshops?

A lot of it was just sitting down and talking about data, what it would be in this context, finding it, and then planning how much of it you need for the dataset. We would talk about how that would fit, or if it would fit, in the dataset.

R: You have been doing these workshops for a few years now. Have you noticed a change in attitude towards, or familiarity with, the topic at hand in the audience?

Not necessarily, as I did not hold the same workshops over and over again in the same place. Often we would have a really intense dialogue about what would constitute data. We talk about what we would like to see in the dataset. We end up having a much deeper discussion about how work is indexed on Google, and how you find written pieces of work by feminist creators when a lot of that work is not saved or not well archived. It ends up being harder than people think it is going to be. Having a discussion around that is part of the project: it is actually hard to find a lot of this work because it is not saved or elevated or treated in the same way as other popular works. So finding work that is inherently feminist is in and of itself hard, which then makes the act of finding it a feminist act.

R: Feminism itself has many facets and many versions. How do you define feminism within this project?

A feminist dataset is an intersectional feminist project; intersectional feminism has a definition and a specificity to it. Feminism itself can be very broad, but intersectional feminism is about placing marginalized groups, trans people and people of colour at the center of feminism; it is not cis white feminism anymore. That is how we are defining it.

It is looking at how things like race and gender deeply intersect. Because feminism is really broad, it is interesting when you start to think about what makes a text feminist. If you are a feminist and you write about math or creative coding, would that make the creative coding text itself feminist? No, because it is not written from a perspective that involves feminism. But if you were talking about the gender balance inside of coding then that would be a different kind of article.

What makes an article feminist is often not that it uses the word itself, but that it addresses or covers something specific. Does that make sense?

C: In the preparation work for machine learning models, a lot of the tagging processes, or the in-between processes that lead to the making of a model, function on a binary basis: whether something does or does not have a certain property. One of the questions in your call for feminist data is how to quantify without binaries. In the discussions that you have had within the workshops, how did you approach this?

That is part of what is hard. In the manifesto it is something we aim for, but it is not quite possible now. ‘Quantifying without binaries’ is also in and of itself a play on words, trying to move beyond binary gender. But you end up having to quantify things inside a worksheet or a spreadsheet when you are working with machine learning. So there is that push and pull.

C: In one of the articles that you wrote for Schloss Solitude, Building a Feminist Dataset, one of the stated aims is that the creation of the feminist dataset is a means to combat bias. But bias is sometimes so pervasive that it is difficult to spot. How do you deal with possible biases being built in?

That is one of the reasons why I look at how old the work is or where it comes from. The subject matter of the work and who wrote it are really important. That is also why right now the dataset is pretty biased. But a lot of it lies within the feminist dataset itself: there is no large repository of feminist works that you can just use for machine learning.

The creation of the dataset works to counteract conceptual bias by being easy to access and indexed on the web. But then what is the bias in the dataset itself? That is what I am working to unpack further.

R: What is your current experience with that?

Not all feminist work is intersectional. We are not taking second-wave feminist work, which really centers a cisgender white woman. But, for example, should a dataset called The Feminist Dataset have writings that are only published by major publishing entities, or that are written by people who are located in the global North? Just having a feminist dataset does not mean it is actually a diverse body of feminism. So a lot of the work is to look at the topic of what they are writing about. You also have to look at all of the creators inside the dataset: where do they live, where did they come from, what languages do they speak, what are their mother tongues, how old are they? The majority of the creators in the dataset that I am referencing are cisgender women who live in Western Europe and North America and were born between the 1960s and the 1990s. Is that a diverse dataset or not? That is what I am unpacking right now: the bibliographic and biographic information and how it affects the dataset.
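The bibliographic and biographic audit described here amounts, at its simplest, to counting how the creators in a dataset are distributed. The sketch below is a hypothetical illustration in Python: the Creator fields mirror the questions asked above, but the records are invented, and a count like this can only show concentration; it cannot by itself decide whether a dataset is diverse.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Creator:
    region: str
    birth_year: int
    languages: tuple[str, ...]


# Hypothetical bibliographic records, invented for illustration.
creators = [
    Creator("Western Europe", 1972, ("German", "English")),
    Creator("North America", 1985, ("English",)),
    Creator("West Africa", 1964, ("Yoruba", "English")),
    Creator("North America", 1991, ("English", "Spanish")),
]

by_region = Counter(c.region for c in creators)
by_decade = Counter(c.birth_year // 10 * 10 for c in creators)  # 1972 -> 1970
by_language = Counter(lang for c in creators for lang in c.languages)


def share(counter: Counter, key) -> float:
    """Fraction of all counted entries that fall under one key."""
    return counter[key] / sum(counter.values())


print(by_region.most_common(1))              # [('North America', 2)]
print(share(by_region, "North America"))     # 0.5
```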

R: Is the discussion that you have between yourself and the participants reflected in the dataset itself in some way?

I am the person who ultimately gets to decide what is in the dataset, so it does get reflected. We have conversations with all participants and this is a major topic. Then, ultimately, I have to go and back-check work just to make sure that it does fit within what we are aiming for.

R: How is the dataset structured? Is there a specific tagging with additional information that provides an extra layer of information to the text?

We have not started tagging yet. A lot of this work will be to look at what all the different subjects are, who the writers are: looking at the age, the race and the location of the author. Then we also look at the subject matter of what they are writing about.

R: Can you already tell us something about the feminist AI that will be trained on the dataset?

I want to make a chat bot and then imagine a feminist UI: what would that look like and what would be the conversational flow?2 The way that the public thinks of AI is as a humanoid interface. That is not quite what AI systems are. A lot of people expect the output to be something really specific or interesting, whereas the output could just as well be similar to what we have already seen. For example, with models that auto-generate poetry, the output is not that different from the poetry itself per se. The actual output that we will see will be very reminiscent of the kinds of texts that are in the dataset. It is still an interesting output, and the process of doing it is fruitful.

What is different about this project is that it is about process and taking the steps to make it happen. I feel like the process of machine learning often does not get questioned or interrogated enough. It is taken for granted. It is worth looking at who makes our tools and what goes into them when we make art or products for companies.

When I get to the point of making a machine learning algorithm like I want to do, it will be a scary step for me personally, but it is also what I am intrigued by. I probably cannot use the majority of the big tools that are out there right now. So what can I do with the tools that do exist? How can they be intervened in? What kind of steps or tests do we need to run? What does an auditing session look like? I will have to look at what is lost when it gets wrapped into an API. When I use a natural language processing algorithm, what will it look like? What are its faults? Can the model ever be stripped back to an extremely bare minimum? Will I have to create my own? What is the process of creating my own? Who do I partner with? And how does that partnership reflect intersectional feminism? Those are a lot of the deeper questions that I have to answer during the very last stage, to then finally make this artistic chatbot.

C: While you were creating the dataset, did you envision situations where it could be used in ways that go against your intentions or those of participants? What could be ways of preventing that?

One thing I have thought about is creating a fake Creative Commons license that says this is only used for feminist interventions or needs; knowing that people legally would abide by that. I feel like that would be a stance. However, I do really struggle with it as I have a lot of friends who work in machine learning and it is hard to think about how your work will be used and misused.

I wouldn’t like it very much if the alt-right wanted to make an alt-right data model and used my research as a way to audit, or to test the proficiency of, what they were creating. Nevertheless, I know that is the thing: when you put something out there under an open source license, you cannot stop that.

The feminist dataset is also pointing out that just because you are using the dataset that does not mean it will work for you, because, again, feminism is a broad ideology. Someone asked me a question – I think they might have been trolling me a little bit – do you expect everyone to use this? Is it a solution? Well no, it is a technical prototype project that is also an art project.

If someone is trying to pinkwash their project by using a feminist dataset, it is not really going to help them, because the dataset does not replace the power of a creator or the ability to interrogate and QA your own dataset. No dataset is ever going to fill in the gaps that a thoughtful or ethical creator can fill in. That is something I try to point out: just because this dataset exists does not mean it is going to help Facebook be a better entity in the world. I do not think any dataset can do that, absolutely not.

As for how it can be misused, any time I try to think about how it could really be used to harm, I often come back to the same questions. I think all creators should put themselves in the position of someone playing with what they have created. Ask yourself the questions you think people will want to ask when they are playing with it, in the sense of: do people see themselves reflected fairly in the dataset? I mean, if someone asks the model a question about a trans experience, like a trans femme, trans masculine or non-binary experience, I need to make sure that the outputs are not hurtful or offensive.

Just because I have a feminist dataset does not mean that the output will not be hurtful. We are dealing with stories: one line of poetry could be about someone contextualizing their rape. That line may be shifted and changed by the training, influencing the output. I know that this is a really gnarly, wicked design problem: a lot of feminist texts talk about really difficult and traumatic experiences. Those traumatic experiences have context, so where is that context in all of this?

I may need a very designed output, and I may have to figure out a way to make sure that text appears almost as a quote when it is describing something, so that the context is not lost. But then how can you surface block quotes out of the data model?

Imagine you are baking and making cake batter: you want it to be really smooth, right? You do not want any lumps of batter stuck together in weird balls. In my data model, I may actually want those things stuck together. I may want the cake batter that has not broken apart yet, because that may be the context of a very important story.

That will be the next iteration after the data model training, after making the ethical Mechanical Turk: how do we actually not break apart major parts of context?

Finding Patterns in Omissions

Interview with Mimi Onuoha – 22 October 2019.

R: We found your work through The Library of Missing Datasets project and we were curious to hear more about it. Is it an invitation to bring the missing datasets into existence, or rather to expand a discussion that needs to happen at a larger scale? Or is it perhaps both?

That particular project began in 2015 while I was a fellow at the Data & Society Research Institute. I began by making a list of missing datasets. But very quickly after making that list, what became more interesting to me was thinking about the patterns of absence, or the reasons why the data is not being collected. I tried to flesh that out and find the patterns behind the missing datasets.

Then there is the second part of the project, which comes from a more social and technical point of view. Particularly in 2015 and 2016, while I was talking about the project, I came into contact with groups who responded very positively to it. They would tell me that they were missing a particular dataset and had not thought about it that way, and that now they had a language to talk about it.

The main example that I use, because I can talk about this one in public, is a group of Broadway performers I worked with who felt that they weren’t getting roles at the same rates as some of their counterparts. They are all Asian American performers based in New York, and they realized that there was no data on the race or ethnicity of people on Broadway and in the theater industry. However, that same kind of data is gathered about the audience, because it is important to know which shows get booked.

They realized that they wanted this data, so they started collecting it themselves. By the time I first heard of them, they had already solved much of the data question, but they were still looking to tie it together, so I joined them. At first I tried to help them see what was important to them about the missing data. Then I helped them with the data analysis: I took the data, cleaned it up and created a number of different visualisations. From that, we drew some conclusions that they could use when talking about the issue.

We wrote an article about it in Quartz. They also organised a huge event here in New York for the theater industry, where they shared the findings of their data collection. So this was a very straightforward process: we were missing data; we got it; we made it work.

Then there is the third aspect of the project: a series of artworks, which are all part of The Library of Missing Datasets. I really appreciate these because they allow for more nuance. This brings us back to your question: am I trying to fill all these gaps? The answer is no, not at all, because not all of them need to be filled. That would be too simplistic. What I’m more interested in is thinking about what the gaps mean in this political moment, when there is so much currency to be gained from having a dataset, plus the financial machinery to use it to create some kind of heuristic field that gets to decide who gets this and who gets that. This third part draws out all of these complexities: some things should be known, others should not. Sometimes it is more useful not to know about them.

C: One paragraph from your writing that really resonated with us is: “The word ‘missing’ is inherently normative. It implies both a lack and an ought: something does not exist, but it should. That which should be somewhere is not in its expected place; an established system is disrupted by distinct absence. Just because some type of data doesn’t exist doesn’t mean it’s missing, and the idea of missing datasets is inextricably tied to a more expansive climate of inevitable and routine data collection.” It is a fantastic paragraph, and we were wondering if you could elaborate on this balance between being included and being left absent. How can missing data be an interference in the system without, at the same time, creating gaps that can harm segments of a population?

Yeah. That is a really good question, and I think it gets at the heart of the tension in the project. For the project I collapse a lot of different definitions of ‘missing’. Some of the things that I include as missing datasets are not really missing: they exist, it is just that the public doesn’t have access to them, for example data on foreign surveillance by the U.S. The information is there; it is just difficult for everyday people who aren’t part of the highly classified governmental system to get access to it. That still counts as a missing dataset for me.

But then I have intentionally put in some datasets where the fact that they are missing is really useful to some people. In fact, any missing dataset is useful to some group.

But typically in the case of missing datasets, the people who benefit from it are those who have the most power in the situation. People who are situationally disadvantaged don’t benefit. In that Broadway example, they came forward and I talked to them. They were missing this data and they wished they had it. And it is in the interest of the people in this industry who have more power for that information not to exist.

Then there are moments when people who have less power in the situation will use missing datasets as a source of obfuscation or as a tool to extend their own allowances. The example that I really like to use for this is extremely U.S.-centric (I’m very sorry!). In a lot of different parts of the U.S. there are towns that have municipal ID cards. So you have your federal card and then your state ID card. On top of that, some cities and towns have their own ID cards, and often these make it easier for undocumented people to have some form of identification. Cities take different approaches to these.

Some cities, like San Francisco and New Haven, collected the information from people to create these municipal ID cards and then immediately got rid of it: they intentionally made sure that the information wasn’t stored. I should say, this was before our current administration was in office; this was under the Obama administration. They knew that if things were to change, the federal government might demand the information on whoever had these municipal ID cards. Because once you have that information recorded, it is pretty easy to check it against other forms of identification and see who the undocumented people are.

The discarding of information was a means of protection. They realized that this is a vulnerable population and that having this data could make things more challenging for them. That is an example where data is missing, but they thought really carefully about why they should not keep it.

And so, when I talk about missing datasets, I include all of those things intentionally, because what I want to show are the rhythms of a system that is nowadays so very deeply dependent on data and data collection. I really want to show what this looks like in all these different ways.

R: It is great that you have these examples of people that you actually worked with. Do you have any particular ethical considerations when it comes to collaborating with groups or communities?

When you are coming from an academic institution, particularly from the social sciences, and you are going to do any kind of work with any population, you have to fill out an IRB (Institutional Review Board) form where you lay out all of the potential harms involved. You have to do all of this work to make sure that it is clear what exactly your research is giving to the world, and how it contributes more than it takes away from whatever group you are working with.

Obviously, in the arts, nobody does that. There is not the same focus. In some ways that gives some latitude, but at the same time it makes it more complicated to think about what it means to work with other people responsibly. I think about it a lot, because quite a bit of my work has involved working with people, which is always far more challenging and complicated than working with a dataset. The stakes are much higher when it comes to working with people.

The model that I often use is about accountability: who am I accountable to? And in this situation, what am I accountable for? I think a lot about power dynamics. Right now I am trying to work with a particular group, and something I keep thinking about is that I am the one initiating the project. While we are working together, I am the one who will go on and talk about it. Similarly, when I was working with the Broadway performers, I told them that I was doing this for free, and they really struggled with that. I kept telling them that it may seem free, but it is not. The transaction they were thinking of was a financial one, but in fact we were having a different kind of transaction: I get to talk about this work, and that is enough for me. In a way, what is nice about that is that it mirrors my work of trying to point out the relationships and transactions that happen and are difficult to see. That was yet another one of those moments.

I do have some basic principles for myself. Overall, though, I would say it is difficult to give a rule for everyone. But as I said, I often think about my own positionality and that of the people I am with, and what people walk away with. With the Broadway project, for instance, I knew they could walk away with something that they could use and build on in their own work. That for me is a really good model for collaboration. When the knowledge feels extractive, when only one side is taking from the other, as opposed to both sides benefiting and giving to each other, that is how I know it is not really a relationship I should be participating in.

C: If the choices that are made while building datasets, for example the properties that are selected, can be seen as a type of representation, how could self-representation play a part in this?

A lot of work I have done is trying to assert the idea that data collection is a relationship. Once we think about it as a relationship, we should be thinking about those different groups that are represented. Often I split that into the groups who are collecting the data, who are the subjects, and then the groups who are having their data collected, who are the objects.

In my early work in particular, I sometimes tried to join those two groups, to make them the same group or to make that relationship extremely explicit. In many cases now, that relationship is implicit: there are many layers between using an app on your phone and seeing what data Facebook is collecting from it. Seeing that as a relationship can be very difficult now, so I was trying to join those two things together and make it a little more obvious. It brings questions of control, power and sovereignty to the forefront. Once you are aware of that relationship, it doesn’t feel so abstract or removed.

It has been a really wonderful exercise to have lots of projects that do that merging. Now that we are in this post-Cambridge Analytica moment, there seems to be a more widespread understanding that companies and government entities are collecting data about us, and of what that means. The way I think about this is again about control: who has access? Not just to the datasets, but to knowledge about them, such as what they are used for? Who is able to say that they do not want information to be collected anymore?

R: Related to the question of who is in control, do you see your work as a way to get more people actively involved in data collection?

It is funny you say that, as I really don’t see my work as a way to get people more involved in data collection.

There are a lot of groups that do data collection right now, who fly under the radar and who don’t come up in a lot of common conversations. In particular, I think of human rights groups and countries who do this really painstaking work of collecting data around, for example, war atrocities. That is one view of data collection. Another one is to consider the sensors that collect physical data and the people who use that for something. There are all these different kinds of views of what ‘data collection’ entails.

Rather than saying that I want more people to be collecting data, I am in the camp of saying that it should be easier for people to understand what their data is used for. I think that distinction is like the difference between wanting everybody to be a car mechanic and making sure that people know how to fix a tire, so that they realise it is within their reach to maintain the vehicles they depend on. I am not saying that I want all of the onus to fall on individuals; what I want is greater systems of protection and accountability around data collection.

I try to do work that operates on multiple levels. One level is working with a specific group in a particular context on what they need. Another is the work as a provocation to imagine what the data would look like. Then there is an aesthetic aspect: it is not just the argument, it is also about how you feel.

To talk about data and data collection can feel quite abstract and removed for a lot of people. But what I often want to do in my work is show that, like so many other processes, this is a very routine thing. The fact that it doesn’t seem like it is part of what makes it easy for people not to be involved in these discussions.

R: How do you see the relationship between artists and designers and the engineers who create and work with these datasets? Or to put it differently, what kind of impact can artists and designers have on the design of datasets and how they are presented?

Last year I worked in an engineering school. What was really different about being at an engineering school is that it was very clear that a lot of the students were going to work for tech companies, software companies, and they were going to make a lot of money. They were going to help create a lot of these systems and that brings its own set of issues. A lot of the students there were concerned about how they could do that work and not do it in a way in which they were exploiting anybody.

But it did strike me how, for a lot of them, engineering is perceived as something very valuable. For the most part, being a designer in corporate spaces also seems to be valued: not quite as much as engineering, but still somewhat. Being an artist, however, ranks lowest on this scale of perceived value. People say things like “Oh, OK. But what are you doing?” People really feel art is the icing on the cake.

For me, it is hard to make sense of that because in my head the three are very connected. They are all just different ways of engaging with the same topics. To me, anybody in any of those fields can have quite a large impact on the ways in which people think about any of these issues.

R: In Us, Aggregated you work with closed platforms that obscure a lot of their workings. We encounter this a lot when working with datasets and machine learning practices. Could you describe how you set about and structure your approach when working with these kinds of platforms in your pieces?

I don’t know if I think about it very differently. A lot of my work has to do with this idea of obscuring – things that are missing, things that are lost. Data is interesting to me because it is artifact-driven: making sense of it is very much about what you see. It does not always get at any of the levels below that.

I come to all of these projects not from the binary of bad or good, but by looking at the affordances that a particular piece of software creates. What are the ideas it creates that are easy for us to miss, or that seem so obvious and so fundamental that we never have to question them? I like to take the stuff that sits on a more hidden level, that we don’t really question, and find a way to put it on the table. We can then have a look at it. Suddenly, we cannot say anymore that we did not know this. How does that change how we relate to it? This is a process that is constantly happening.

This brings me to Us, Aggregated, which is about how these things that we use every day shape the way we understand the world, without us thinking about how they shape it. Can that be good, or can it be productive? Is it something that we actually never want? Does it give us a strange sense of belonging to be classified by these systems? How do we make sense of these in a way that goes beyond knowing that this is essentially bad? So many of these technologies are now in the fabric of our lives that it is no longer easy to say that something is just all bad or all good.

Glance versus Gaze

Interview with Nicolas Malevé – 9 January 2020.

R: We saw some sneak peeks of your PhD research through your talks at The Photographers’ Gallery and the workshop with Algolit.3 In your artistic practice, you do not only look at datasets from an academic perspective, but really work with them. When you have a dataset that you find interesting, how do you go about exploring it and seeing what is there? What methods or tools do you use?

I think that would be my question to you also!

The first question is about finding the datasets, because how to look at them, or how not to look at them, depends on how you find your way to them. For instance, I discovered visual image datasets by learning to program computer vision applications. It was, let’s say, 2003, when I was running OpenCV sample programs. Although that was already a machine learning algorithm (Viola-Jones), the question of the dataset was not even present: the OpenCV tutorial would not talk about training.

I had bought a book that only mentioned how the training was done in an appendix. There were a few scripts that you could find in the documentation provided by OpenCV. But in general, the algorithms that we used were for detecting contours, colour, background and foreground, and segmentation, and all of them worked without having to learn from other images. So datasets were there, but you could simply ignore their presence, because they were mainly used for specific applications like face detection. Everything else could be done by other means.

My encounter with datasets came from working with Michael Murtaugh on the archive of Erkki Kurenniemi, who was a musician, but also a very obsessive archivist of his own life. He had recorded a lot of tapes that were either ramblings or field recordings. The term dataset, however, was not part of the conversation at the time.

We had made this radio and were thinking about how we could detect moments of silence and moments of voice, and, little by little, how we could detect speech. For speech detection, training the algorithm on voices was really important. So my first encounter with datasets was with people reading sentences to train speech detection.

Interestingly, I do not know if it is because speech detection was more advanced or just because I happened to enter the field from there. It actually took me a very long time to realize that the same techniques were being used in computer vision. For a long time it looked like you could do a lot of things without necessarily needing large amounts of images. Because of that, today I am always conscious of the fact that there are other options. I mean, you cannot do everything with other techniques, but they are there, and somehow they are strangely out of fashion.

I had a discussion with somebody who is the director of a computer vision lab in Switzerland. She said that for three years, when deep learning became the standard, she could not really work. She had to learn the technology, but that was easy. The difficult part was that they simply did not have the means to operate at that scale. When you do not have this infrastructure, you are disconnected from this kind of practice.

Another thing I think is important to mention is that I have never produced a dataset. That is really weird when you think about how important the dataset is when you use any kind of detector. I realized I spent five or six years programming things with computer vision and never assembled anything remotely resembling what you would need to train a face detector or something like that. In terms of how datasets are commonly approached, it’s interesting that they are never seen as something produced, only acquired or found. I would like to see what it means to assemble something at such a scale, but that would be a whole project in itself.

I actually work with datasets either unknowingly – because I am using something that is pre-trained – or out of curiosity. For instance, I used the Viola-Jones algorithm for face detection for a long time. At some point, looking at the code samples provided for training, I realised that there is a way to visualize the faces that were actually used to train the algorithm. For me, it was a very moving moment to see the images: they are 20 × 20 pixels and you can go through all the faces.

Then you realise that what the algorithm uses for training is actually extremely abstract: black-and-white, quite brutally cropped images with almost no detail. But it still catches something of the configuration of the face, which is quite surprising. First you have the dataset, and then you have what is in the end used for training, which is not necessarily the same thing; the original photos have much more detail. To make these images operational, you need to follow a series of steps that alter them and make them function very differently. If you look at them as representations of people, they are really poor representations. But as something that makes software operational, they are quite efficient. I mean, if I had these hundreds of images and they were the only thing I knew of a face, would I, as a person, recognize the face? Would I be able to make sense of anything with so little information? It is really surprising that it works.
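The reduction described here (colour removed, tightly cropped, shrunk to 20 × 20 pixels) can be made concrete with a small sketch. It is an illustration under stated assumptions, not the OpenCV training procedure or any tool mentioned in the interview: the function names, the BT.601 luminance weights and block averaging as the shrinking method are all choices made for this example, and images are plain Python lists rather than a real image format.

```python
# Hypothetical sketch (not OpenCV's training pipeline): reduce a colour
# photograph to a small grayscale patch, like the 20x20 Viola-Jones samples.
# Images are plain nested lists of (R, G, B) tuples with values 0-255.

Pixel = tuple[int, int, int]


def to_grayscale(image: list[list[Pixel]]) -> list[list[float]]:
    """Collapse colour into a single luminance value per pixel.

    The ITU-R BT.601 weights used here are an assumption for this example.
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in image]


def crop(gray: list[list[float]], top: int, left: int, size: int) -> list[list[float]]:
    """Cut a square box out of the image and discard everything around it."""
    return [row[left:left + size] for row in gray[top:top + size]]


def downsample(gray: list[list[float]], out: int = 20) -> list[list[int]]:
    """Average non-overlapping blocks so a size x size crop becomes out x out.

    Assumes the crop's side length is a multiple of `out`.
    """
    step = len(gray) // out
    patch = []
    for i in range(out):
        row = []
        for j in range(out):
            block = [gray[y][x]
                     for y in range(i * step, (i + 1) * step)
                     for x in range(j * step, (j + 1) * step)]
            row.append(round(sum(block) / len(block)))
        patch.append(row)
    return patch


def make_training_patch(image: list[list[Pixel]], top: int, left: int,
                        size: int, out: int = 20) -> list[list[int]]:
    """Grayscale, crop, then shrink: each step throws information away."""
    return downsample(crop(to_grayscale(image), top, left, size), out)


# Usage: a synthetic 100x120 "photo", left half black, right half white.
photo = [[(0, 0, 0)] * 60 + [(255, 255, 255)] * 60 for _ in range(100)]
patch = make_training_patch(photo, top=10, left=20, size=80)
print(len(patch), len(patch[0]))  # 20 20
```

Each step discards information: an 80 × 80 colour crop (19,200 channel values) ends up as 400 grayscale numbers, a poor representation of a person but the kind of reduced input a detector of this sort is trained on.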

C: It is true that maybe five or six years ago much less attention was given to datasets. I think one of the reasons is that as these algorithms were picked up and used in more pervasive ways, the errors they made became increasingly glaring, and thanks to great research and journalism they were exposed. Just searching for “Google apologises” gives back lots of results, because they have had to apologise so many times for their algorithms making horrible associations, and of course they were not the only ones.

And many of these errors are errors of classification.

Yeah, yeah. I think that is something really surprising when you are even vaguely familiar with art history or critical library studies. Even if you are not an expert in these fields, you have at least a suspicion that classifying the world is not an easy task. You would not go about it confidently. And yet, apparently this is not the case for some.

C: Some are more confident than others.

Exactly. And it is a learning process that the AI community is going through. I don’t know if you have looked at the ImageNet website recently. You have a few images on the front page, and for the rest you have absolutely no images at all. The images were available until at least two years ago, when I started the PhD research, and then suddenly a lot of categories were emptied of their images. They only kept part of the ImageNet dataset online.

Do you know the ImageNet challenge? Every year, ImageNet organised a challenge together with other organisations, in which different companies and universities competed. This particular challenge is famous because it is part of the so-called deep learning revolution: it was one of the first challenges where the amount of training data was suddenly so huge that deep learning algorithms could really make a difference. Below a certain threshold of training data, they could not really stand out, but with these millions of images available for training, they would suddenly clearly outperform other algorithms on certain tasks. ImageNet was recognized as an important resource, and through these challenges it became a referee that would declare one way of doing things better than another.

The ImageNet challenge was always run with a series of categories that the owners of the dataset deemed ‘safe’. So they were aware, I guess, that not all the categories had the same level of precision. Some of them were hugely problematic in terms of representation, identity and so on. For a long time, only those approved categories were available, and the others were simply white pages: you could browse the categories but not see the images. Now everything has been taken away. It is like a ruin. It is an empty classification and a very interesting object to look at (I don’t know if ‘look’ is really the right verb, because there is nothing to see there).

It is a very interesting moment, to arrive at this point where a major collection of images with such authority in computer vision is simply emptied out in its public presence. There is a long article where they explain how they are trying to clean their dataset and make a new version of it. They tried to respond to all the problems that they discovered along the way. Surprisingly, it seems that they did not anticipate the difficulty of doing this. You can read this long article in two ways: on the one hand, they have really tried to do something about it, and on the other hand you can measure the distance between this and any project in the cultural world. For example, photographic archives are much more aware of all these problems than ImageNet is. In that sense, it is quite dizzying to read: you get the impression that some widely established concepts, a critique of the image as representation for instance, just were not part of their process.

C: There is also a big shift happening now in the field of ethics in AI, with a lot of vigorous research going on. It seems that AI research could become more interdisciplinary, but this is happening very slowly.

Yeah. You have the impression that there is this super state-of-the-art technology, but then you realise that behind it are extremely archaic structures used to classify the world. ImageNet is organized according to the structure of WordNet. When you look at WordNet, it is really hard to believe that you could use these kinds of categories to think about the world today.

Even in their own reflection on how to go further, they talk about WordNet as a stagnant vocabulary. However, the problem is not that it is old. You can see some of these datasets as a kind of preservation of very old structures that are not at all easy to change. You would struggle to come up with a replacement for WordNet that has the same breadth, because you know that everything is problematic. But if you depend on the scale, then you are very tempted to say “let’s try to bring it up.”

I really recommend reading this ImageNet article. It is like peeling an onion: they have seen the problem with slurs and pejorative words, so they take them out. Okay. Then there are categories that are not problematic per se, but could be problematic when used for detection. So they take them out. Then there are concepts that are difficult to translate into an image. So they take out some of them and recommend against using the rest. And at the end, what’s left…

R: …is an empty website.

Yeah, probably some tree on the green grass with a blue sky.

R: There are datasets that expose the desire to classify everything, versus very specific datasets like the ones you have mentioned in your presentations – for example image datasets for detecting cancer – which are much narrower in focus. That is another kind of dataset, with a completely different aim.

Of course, that is a very good point. We need to be careful not to treat all datasets as equal, actually. And indeed, the problem of ImageNet is not necessarily a problem that you can extrapolate to every dataset.

There are many datasets that depend on the fact that their data are photographs. There is an assumption that because things can be photographed, they can be compared. As if making a photograph were a transparent act, so that the algorithm will learn something from the referent and not from the photograph. For me that is a common blind spot of a lot of dataset projects. The philosophy of what it means to learn is assertive; the learning seems exempt from any reflexivity. For example, there is a whole terminology surrounding the cleanliness of a dataset: it should not be tainted, contaminated, and so on. All the agency of data is seen as potential disease or corruption. And that is because the training process does not allow you to learn critically from the data that is fed to the algorithm.

On the one hand, you have a discourse that says, “we have data, it is totally passive and the algorithm mines and extracts the knowledge and makes sense of it.” And then you have the other version of that which says, “actually the data drives the algorithm and therefore it is ‘garbage in, garbage out’.” Then the only thing you can do is to clean the garbage.

But the image world is intrinsically garbage. When you have cleaned it up, you have nothing left. Nothing worth learning from. Because when you see a photograph, it is all about it being a point of view, it is all about a way of framing, it is embedded in certain technologies. It is marked by how it circulates. So there is no way you can remove all this from the object. It is there, down to the last pixel.

There is absolutely no approach that takes into account just what is specific about a photograph and how it cannot be reduced simply to data, and that is a problem. It raises questions not only about having a good or a bad photograph, but about having to recognize fully what a photograph is. And that probably raises questions about the whole approach and not just about finding the right image, the image that is exempt from any racist bias. I am all for having datasets with less racist bias, but I don’t think it solves the core problem of what it actually means to learn from this. This approach to learning is showing its limits.

Especially for the case of photographs, I see clearly where it relies on the photograph being transparent. But by doing so, the algorithm is unable to cope with what it learns from.

R: Is it not also the inevitability of the author being the one who selects? You mentioned in one of your presentations that the first image datasets consisted of photographs of the authors of the dataset. I recognize this from my research into emotion recognition software. For example: the seven basic expressions of emotion were illustrated by Paul Ekman using photographs of his own face. Even now there are other instances, such as the Stanford dataset which only shows students at Stanford, and is collected by people from Stanford.

So is this cultural bias, or this perspective, not inevitable for the whole practice?

I think it is inevitable. And it is not even negative per se. It only becomes negative once the process relies on not acknowledging that it is biased. I really like this dataset Faces 1999, which is a self-portrait of the lab. The problem arises when you think that from there, we can generalize. Generalizing in the sense of turning these photos into data and thinking you have extracted something that is common to all.4

There are probably regularities, but they are hugely problematic. And they are worth researching rather than hiding. For instance, Joy Buolamwini, who is doing research at MIT, talks about datasets being racist and gender biased, and these dimensions being cumulative. She brought intersectional discourse into the world of computer vision. She made the point very clearly to engineers and as a result, certain companies took her criticism into account and tried to at least reduce some of the bias in their datasets.

First, it is important to say I have a lot of respect for the work she does, but it is all seen through the prism of representation. In her thesis, her point was that if we have a more diverse dataset, we have a more diverse algorithm. This is an important point for an immediate impact on these technologies, but it relies on the assumption that you can have the right representation at all. And that only leads you so far, because at some point representation itself becomes a problem that you need to take into account. At the moment we are nowhere near having a discussion about that with computer scientists.

R: There’s always something or someone who defies the classification.

Yes, that. But moreover, you never know what you learn from. I’m going back to ImageNet because it is something I know well, but to some extent, a lot of datasets are constructed like this. People make queries on a search engine, they get back a list of photos as results, and from these photos there is a cleaning stage where Amazon Mechanical Turk workers are employed. This produces datasets that have huge issues which can be framed as representational problems. But all along the way, problems appear: there is the apparatus of search that picks up certain images over others, there are the platforms, the ways in which the image travels online, the ranking and the association of a term with an image. There are so many ways to relate a label to an image which are not about representation per se.
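To make the cleaning stage concrete, here is a minimal sketch of one common way crowd judgements can be turned into a kept/discarded decision: each candidate image returned for a query is shown to several workers, who answer yes or no, and a vote threshold decides. This is an assumed, simplified majority-vote filter, not ImageNet’s actual procedure, which the interview does not describe; the function name, image ids and thresholds are hypothetical.

```python
def verify_candidates(votes, min_votes=3, threshold=0.5):
    """Hypothetical majority-vote filter for crowd-verified image labels.

    votes: dict mapping an image id to a list of booleans, one per worker,
    answering "does this image show the query term?"
    Returns the ids whose share of "yes" answers exceeds `threshold`.
    """
    kept = []
    for image_id, answers in votes.items():
        if len(answers) < min_votes:
            continue  # too few judgements collected; leave the image out
        share_yes = sum(answers) / len(answers)
        if share_yes > threshold:
            kept.append(image_id)
    return kept


# Example with invented data: only img_001 reaches a "yes" majority
# with enough votes.
votes = {
    "img_001": [True, True, False],
    "img_002": [False, False, True],
    "img_003": [True],
}
print(verify_candidates(votes))  # ['img_001']
```

Even in this toy form, every parameter (how many workers, which threshold, what question is asked, at what thumbnail size) is a point where the workers’ conditions enter the dataset.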

The aesthetics of the images have certain currency on various platforms. In ImageNet half of the images are from Flickr.

C: In the COCO dataset too, there it’s 100%.

So why are certain images prominent in Flickr? When you have these search queries, they pick what the platform promotes. There is much more to that than the representation.

Then there is the layer of Amazon Mechanical Turk, where people are shown thumbnails. They are seeing zillions of images, so they label them as best they can. The population of these workers is a very particular one. They also anticipate what the employer expects. All of this may affect how they label the images.

At the end of the process you have an image dataset, but all the parameters along the way are so complex in terms of infrastructure, software, culture, that there is much more at play than just plurality of representation.

C: As a side note, in one of Anna Ridler’s presentations, she talks about her process of making a tulip image dataset. She bought differently coloured tulips in equal quantities for this purpose. She trained an algorithm on this collection and expected the output to also be equal in terms of colour representation, but it turned out that for some unknown reason the algorithm really liked the colour red above all the other colours, so most of the output images contained red. In order to correct this, she had to skew the percentages of red and white tulips and buy fewer red ones in order to have more or less equal amounts of red and white tulip variations in the output.
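The correction described here can be sketched as simple arithmetic, under a strong assumption that is not from the interview: that the trained model multiplies each colour’s share by a fixed bias factor, so that output share is proportional to input share times bias. One training run then lets you estimate the bias, and the input proportions can be inverted to aim for a balanced output. The function name and all figures below are hypothetical; a real generative model need not behave this linearly, and in practice the loop of retraining and checking would be repeated.

```python
def rebalance(input_share, output_share, target_share=None):
    """Hypothetical input proportions that should yield `target_share` in
    the output, assuming output share is proportional to input share
    times a fixed per-colour bias factor."""
    colours = list(input_share)
    if target_share is None:
        # default target: every colour equally represented in the output
        target_share = {c: 1 / len(colours) for c in colours}
    # bias factor observed in a first run: how strongly each colour is over-
    # or under-produced relative to its share of the training data
    bias = {c: output_share[c] / input_share[c] for c in colours}
    # dividing the target by the bias cancels it out; then normalise to 1
    raw = {c: target_share[c] / bias[c] for c in colours}
    total = sum(raw.values())
    return {c: raw[c] / total for c in colours}


# Invented figures: equal red and white input, but 80% red output.
new_mix = rebalance({"red": 0.5, "white": 0.5}, {"red": 0.8, "white": 0.2})
print(new_mix)  # red about 0.2, white about 0.8
```

Under this model the red bias is 1.6 and the white bias 0.4, so buying roughly 20% red and 80% white tulips would give equal output shares (0.2 × 1.6 = 0.8 × 0.4).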

Yeah, and it’s super interesting that in her case she controls the whole process, so she can oversee this feedback loop between the dataset, the result, going back to the dataset and so on. Whereas when you work with forty million images, even if you are the director of the Stanford AI lab, you do not have the budget for that. There are two or three companies that are able to afford the feedback. They have the infrastructure and a large enough turnover of images to at least run some sort of feedback cycles. But for the rest of us…

R: That is something we were discussing this morning, that when we look at academic datasets, they are static and almost archives in and of themselves. They are almost like a monument. When it comes to commercial practices however, smaller companies use these datasets pretty much off the shelf, but the datasets of big companies are in flux. They are ever changing. And maybe some of the questions that we bring up with these monument datasets do not apply to them because they are actively adapting to critique. Although, with these you don’t know what you are critiquing anyway since they are not open to the public.

There, one should really be a spy and see how they cope with that. You can still imagine that even if they have some sort of feedback, it is not immediate. What we see with ImageNet is so obvious, but I assume it is a problem that exists in every context because you cannot just use the data out of the box to make a dataset. So you are always in a sort of disconnect from the flow of data. It is an inherent problem.

C: You mentioned the Amazon Mechanical Turk workers. One of the quotes we picked up from your text Introduction to Image Datasets was that the interfaces “of annotation are designed to control workers’ productivity, to find the optimal trade-off between speed and precision”.

This felt relevant to us because we have been thinking about the interfaces at the other end, the ‘exploration interfaces’ that are often used to present certain image datasets. The COCO and ImageNet datasets, for example, each have one. And they are meant mainly for data scientists and researchers. But the rhetoric of these interfaces is different from that of those built for Mechanical Turk workers. They steer you with different rhythms.

You build a lot of interfaces to data collections yourself. What role do they play in your practice?

My work on interfaces is really a way to learn by playing. It has always been a mix of trying to understand how certain technologies were working and finding ways to play with them. If I look at what I have done before, I don’t think there was a very critical purpose. It was more about trying to engage through turning things inside out and upside down and seeing how they react. I did this work mostly with Michael Murtaugh. A lot of our interest was to engage with a sort of alterity of it; to understand data using the kind of mathematics and constructs which are algorithms and their ecology of programs. I spent a lot of time trying to see how the programs could be used not to reflect or mimic human perception, but rather to see what was different from human perception in these algorithms.

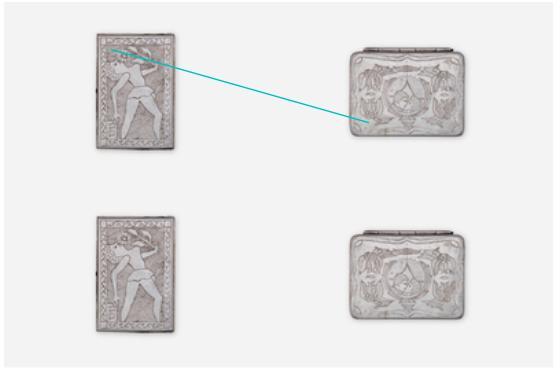

We spent lots of time looking at ‘features’ when we worked with Ellef Prestsæter on the archive of Norwegian artist Guttorm Guttormsgaard. He had this wonderful, extensive collection of photographs, sculptures and objects and we used a feature detector to match similar images. What interested us was which points in these images the algorithm actually picked as key reference points to connect them. And of course, that would attract your eye to places in images where you wouldn’t even have looked.
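For readers unfamiliar with feature matching, here is a minimal sketch of the matching half of such a pipeline: each keypoint in one image is described by a numeric vector (a descriptor), and a keypoint is linked to its nearest neighbour in the other image only if that neighbour is clearly closer than the second-nearest one (the ratio test associated with David Lowe’s SIFT work). The interview does not say which detector or matching rule was used; detection itself is omitted here, and the toy 2-D descriptors and the 0.75 ratio are assumptions for illustration (real descriptors such as SIFT have 128 dimensions).

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b,
    keeping only matches that pass a nearest/second-nearest ratio test.

    Returns a list of (index_in_a, index_in_b) pairs.
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs two candidates to compare
    for i, da in enumerate(desc_a):
        # Euclidean distance to every descriptor in the other image
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:  # nearest match is clearly better than the runner-up
            matches.append((i, j1))
    return matches


# Toy descriptors for two hypothetical images.
image_a = [(0.0, 0.0), (5.0, 5.0)]
image_b = [(0.1, 0.0), (3.0, 3.0), (5.0, 5.1)]
print(match_descriptors(image_a, image_b))  # [(0, 0), (1, 2)]
```

Nothing in this rule asks whether a point is meaningful to a human viewer: a small variation in an otherwise flat beige surface can produce a descriptor that happens to be distinctive, and so become the link between two unrelated images.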

I remember this cigarette box on a beige table. There was one point in the middle of this beige where some sort of variation had happened. It was interestingly connecting two images out of the blue. And it was quite stunning.

For me it was super exciting to use these kinds of techniques to shift your own perspective and question your assumption of what it means to look at an image. For a few years, I remember that as a really joyous, playful and sort of pleasurable moment where you suddenly have the impression that you can use these algorithms to expand your own understanding of what vision is and not really force a reduced understanding of that onto a poor algorithm that has to mimic what you think you know.

That is also something that was really interesting in doing the research: little is known about what vision is; even physiologically, it is still quite mysterious. So it is really weird to want a computer to learn vision from humans when we do not know how humans see.

Deep learning has been a shift where suddenly the scale of training was really making a huge difference. It was this idea of having the algorithm as a way of mimicking repetitive forms of looking. Although it was spectacular in that it could detect many more things, I really felt it as an impoverishment. There are obvious successes in computer vision today, that is hard to question, but the relation has changed for me. I don’t feel the same pleasure or curiosity today that I felt at that moment. Probably partly because it is not as new as it was – that sort of honeymoon is over – but it is also because of the sad understanding of what learning entails. I mean, there is something that makes it difficult to still have a lot of enthusiasm when you see that it depends on people being paid one cent per task.

There is something about it that is so aligned with, let’s say, simply capitalism at its extreme. When you see how people program, they probably spend a lot of time tweaking their algorithms. But essentially, the bulk of the work is done through repetitive actions where people are bored to death doing the same things and they are closely controlled.

C: I wonder what a joyful dataset-making process would look like.

Anna Ridler’s work is a good example of that. Of course, it is one person’s project, so there are not these problems of scale and dialogue and so on, but you can already see by following her presentation that there are other ways of making a dataset.

The problem I see in deep learning in particular is that of scale. It is super interesting to make an art project where you collect your own tulips, but it only leads you up to a certain point where it does not compare with what you can do with something that is trained with the whole set of Facebook images, for example. The algorithm can really do much more with more data. That structurally creates an imbalance between those who can capitalise on these huge silos and those who have to build them from the ground up. You can always play in a certain context where even a small dataset can make a difference and be clever about it, but there is also this structural imbalance that needs to be addressed and that is super difficult.

R: On the matter of scale, you also mentioned the glance versus the gaze – that the dataset becomes so big there is no time to even consider what is in there. Could you tell us more about that?

I redid experiments which were done when Fei-Fei Li was creating ImageNet. At the same time, she was doing research on what it means to look at a scene in a glance. So she was working in the context of cognitive psychology on the speed of vision, especially early vision. She did several experiments and I redid one of them during my research.

Also, I reconstructed an interface from an experiment she did much more recently with other researchers, in which they were asking people to classify images. But the image would not stay on the screen, it was a continuous flow of images. While looking at this flow of images, you are asked to press the spacebar when you see a horse or a dog for example5.
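The logic of such a rapid-stream task can be sketched as a scoring function: images appear one after another at a fixed interval, and a spacebar press counts as a hit if it falls within a response window after a target image appears. The 100 ms interval, the 200 to 800 ms window, the greedy press-to-target assignment and all names are assumptions for illustration, not the parameters of the experiment mentioned here.

```python
def score_rsvp(stream, targets, presses, soa_ms=100, window_ms=(200, 800)):
    """Score a hypothetical rapid serial visual presentation task.

    stream: labels of the images shown in order, one every `soa_ms` ms.
    targets: labels the participant must respond to (e.g. horse, dog).
    presses: spacebar press times in ms from the start of the stream.
    Returns (hits, misses, false_alarms).
    """
    # onset time of every target image in the stream
    onsets = [i * soa_ms for i, label in enumerate(stream) if label in targets]
    unused = sorted(presses)
    hits = 0
    for onset in onsets:
        lo, hi = onset + window_ms[0], onset + window_ms[1]
        # greedily credit the earliest unused press inside this target's window
        match = next((t for t in unused if lo <= t <= hi), None)
        if match is not None:
            hits += 1
            unused.remove(match)
    misses = len(onsets) - hits
    false_alarms = len(unused)  # presses not credited to any target
    return hits, misses, false_alarms


stream = ["cat", "tree", "dog", "car", "horse", "cup"]
print(score_rsvp(stream, {"dog", "horse"}, presses=[550, 750, 2000]))  # (2, 0, 1)
```

At intervals this short, the viewer can only glance, and the score records nothing but whether a category was registered in time.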